Agentic AI has generated considerable excitement as the next big thing. But how soon is “next”? The potential of the new technology is clear: agentic AI systems make it possible to combine LLM capabilities with code, data sources, and user interfaces to achieve complex tasks and workflows. This goes far beyond the traditional “prompt and response” mode of generative AI. Simply put, agents don’t just give answers—they can also take action.
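To make the difference between answering and acting concrete, here is a minimal sketch of an agent loop in Python. It is illustrative only: `stub_llm` stands in for a real model call, and the `lookup_order` tool, the order ID, and the (action, argument) reply format are hypothetical. The pattern is the one described above. The model chooses an action, ordinary code executes it against a data source, and the result is fed back to the model until it produces a final answer.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]                     # hypothetical tool: text in, text out
Model = Callable[[List[str]], Tuple[str, str]]  # model: history in, (action, argument) out


def stub_llm(history: List[str]) -> Tuple[str, str]:
    """Stand-in for a real LLM call (hypothetical).

    A real agent would send `history` to a model and parse its reply; this stub
    requests one lookup, then returns a final answer once it sees an observation.
    """
    if not any(line.startswith("observation:") for line in history):
        return "lookup_order", "A-1001"
    return "final_answer", "Order A-1001 has shipped."


def run_agent(task: str, llm: Model, tools: Dict[str, Tool], max_steps: int = 5) -> str:
    """Let the model pick actions, execute them in code, and feed results back."""
    history = [f"task: {task}"]
    for _ in range(max_steps):
        action, argument = llm(history)
        if action == "final_answer":
            return argument
        if action not in tools:
            # Report the bad choice to the model instead of crashing.
            history.append(f"observation: unknown tool {action!r}")
            continue
        result = tools[action](argument)  # the agent acts through code, not just text
        history.append(f"observation: {result}")
    return "stopped: step limit reached"


tools: Dict[str, Tool] = {"lookup_order": lambda order_id: f"{order_id} status=shipped"}
print(run_agent("Where is order A-1001?", stub_llm, tools))
```

The `max_steps` cap is a deliberately simple guard that keeps a confused model from looping forever.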

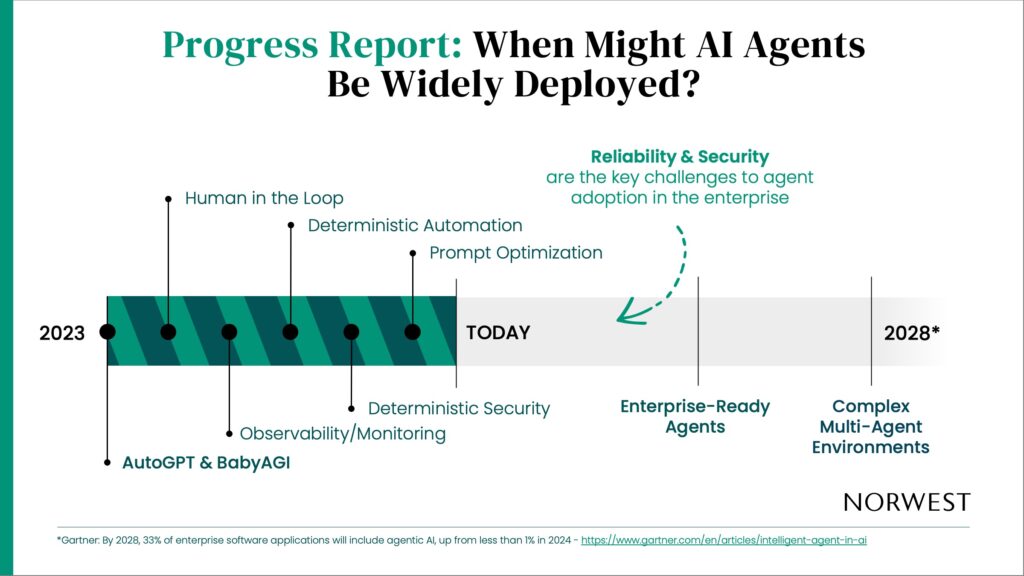

What’s less clear is how much progress has been made toward widespread enterprise adoption of production-ready autonomous AI agents. Gartner predicts that by 2028 agents will be deeply embedded within the broader software stack, with one-third of enterprise software applications including agentic AI. But how close is the current technology to earning the right to be deployed?

To gain clarity on the promise, current state, and possible future of this transformative technology, we are constantly speaking with founders in the agentic AI space and the enterprise AI practitioners who would be their customers. Here are some of our key observations and answers to the most common questions surrounding AI agents.

Are Today’s Agents More Than Just Thin Wrappers on LLMs?

The March 2023 release of AutoGPT and BabyAGI hinted at what large language models (LLMs) might make possible. As the open-source projects spread virally across GitHub, they sparked a wave of development activity and innovation. After in-depth conversations with emerging players in the category, we see a huge opportunity for agentic AI offerings that prove to be meaningful advancements over existing LLM capabilities. That means being more than thin wrappers, such as the generative AI copilots that run a chat-based LLM alongside an application without necessarily enhancing the application’s capabilities.

Differentiating autonomous AI agents from thin wrappers is essential to proving both their value and their readiness for widespread enterprise adoption. After all, many of the enterprise technology leaders and practitioners we spoke with told us they were actively researching agents and demoing or piloting agentic AI solutions, but few had much to say about successful production deployments.

To better understand the market landscape of agentic AI, we developed a simple framework for evaluating the differentiation of agents in their current state.

- Use cases: Enterprise adoption depends on a clear business case, not just innovation for its own sake. Are agents being used for meaningfully more sophisticated and specific use cases than a foundation model like GPT-4o could address on its own? Have they moved past the question-and-answer interaction mode?

- Deterministic safeguards: Businesses are rightly wary of the risks that can come with early AI adoption. Trustworthy AI is even more critical when an LLM powers business process automation. Are there safeguards and guardrails in place to prevent agents from hallucinating in production environments? Will intra-process agent mistakes be observable?

How Are Agentic Companies Building Trust?

Autonomous AI agents must be trustworthy given their potential role in automating mission-critical business processes. Today, two key factors give enterprises pause:

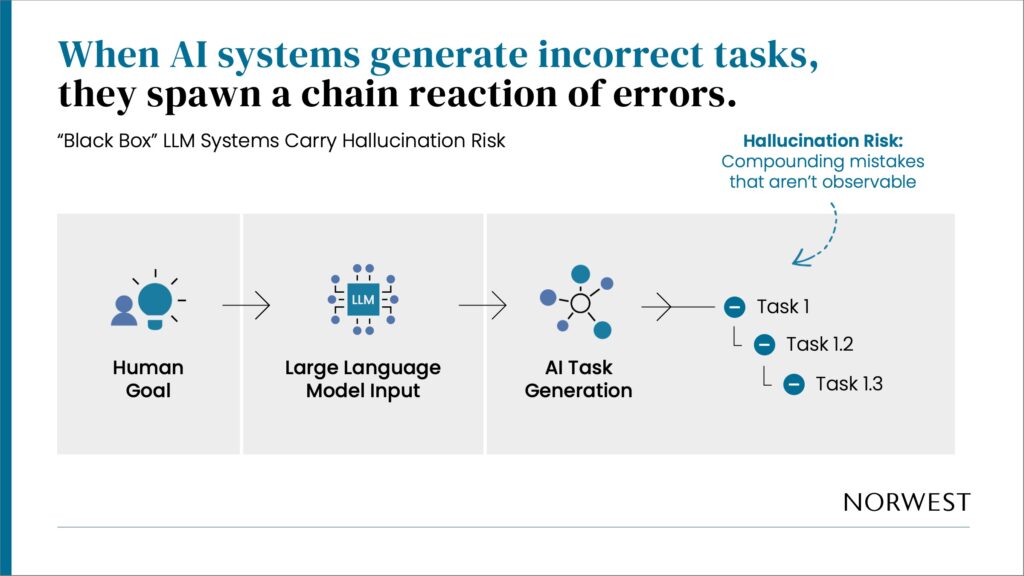

- LLMs, the brains of AI agents, are non-deterministic systems—essentially black boxes that make it difficult for human users and engineers to determine when an agent has achieved its goal and whether it performed as expected.

- LLMs still face issues with hallucination, where the model output is fabricated and not consistent with the provided context or the world knowledge of the model. In agentic systems where LLMs are creating the sub-tasks to achieve their goals, underlying hallucinations can lead to compounding mistakes in agent behavior as they are carried through the workflow.

Enterprises will vet agents carefully to ensure that appropriate guardrails are in place to mitigate these pervasive hallucinations.
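The compounding effect is easy to underestimate. As a rough illustration, assume each step in an agent workflow succeeds independently with the same probability p, and that nothing catches or corrects an error along the way. The whole workflow then succeeds with probability p raised to the number of steps. The 95% per-step figure below is an illustrative assumption, not a measured rate.

```python
def end_to_end_success(step_success: float, num_steps: int) -> float:
    """Probability that every step in a chain succeeds.

    Assumes steps fail independently and that no error is caught or corrected.
    """
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be a probability in [0, 1]")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_success ** num_steps


# Illustrative per-step reliability of 95% across workflows of increasing length.
for steps in (1, 5, 10, 20):
    print(f"{steps:>2} steps at 95% per step -> {end_to_end_success(0.95, steps):.1%}")
```

Under these assumptions, a step that is right 95% of the time still leaves a 10-step workflow finishing cleanly only about 60% of the time (0.95^10 ≈ 0.599), which is why guardrails that catch errors between steps matter.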

The companies we’ve spoken with and evaluated have shared a number of different approaches and playbooks they use to ensure that hallucinations, errors, and sub-optimal choices don’t compromise production environments. These include:

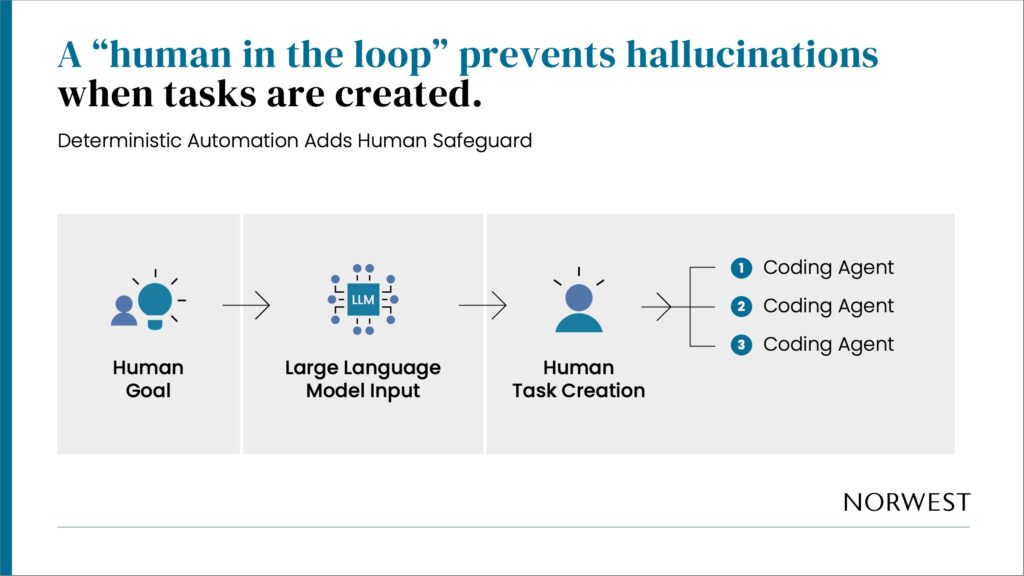

- Deterministic automation, a.k.a. “human in the loop”: Each agent deployment involves dedicated engineers and product managers who translate the customer journey design into agent code. Human practitioners then closely review and correct the resulting agent conversations, adding a human safeguard against hallucinations.

- Deterministic security: The company defines a security policy and configures a rules engine to validate that the agent’s actions are secure.

- Agent logging and observability: Analyzing agent traces can help companies learn where an agent succeeds and where it fails, such as when it gets stuck in a loop, endlessly repeating the same actions. With that knowledge, agent engineers can improve how reliably the agent follows its instructions and prevent the error from recurring. We see this function as a key driver of successful adoption in enterprise environments (more on that in the next section).

- Prompt optimization: One way to address hallucinations is to refine agent prompts so they instruct the agent to use only freshly extracted information rather than memorized information, which may be outdated, incorrect, fabricated, or misremembered.
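The human-in-the-loop and deterministic-security approaches above can work together. The sketch below assumes a simple first-match rules engine: each rule inspects a proposed agent action and either allows it, denies it, or routes it to a human reviewer, and anything no rule recognizes is denied by default. The `AgentAction` shape, the tool names, and the 500 refund threshold are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional


class Verdict(Enum):
    ALLOW = "allow"
    DENY = "deny"
    NEEDS_HUMAN = "needs_human"  # route to a human reviewer before executing


@dataclass(frozen=True)
class AgentAction:
    """A proposed action the agent wants to take (hypothetical shape)."""
    tool: str
    amount: float = 0.0


# A rule returns a verdict, or None if it has no opinion on this action.
Rule = Callable[[AgentAction], Optional[Verdict]]


def evaluate(action: AgentAction, rules: List[Rule]) -> Verdict:
    """Apply rules in order; the first rule with an opinion wins. Default: deny."""
    for rule in rules:
        verdict = rule(action)
        if verdict is not None:
            return verdict
    return Verdict.DENY


# Hypothetical policy: tool names and the refund threshold are illustrative.
policy: List[Rule] = [
    lambda a: Verdict.DENY if a.tool == "delete_records" else None,
    lambda a: Verdict.NEEDS_HUMAN if a.tool == "issue_refund" and a.amount > 500 else None,
    lambda a: Verdict.ALLOW if a.tool in {"lookup_order", "issue_refund"} else None,
]

for proposed in (AgentAction("issue_refund", 50.0), AgentAction("issue_refund", 900.0),
                 AgentAction("delete_records"), AgentAction("send_email")):
    print(proposed.tool, proposed.amount, "->", evaluate(proposed, policy).value)
```

Defaulting to deny keeps the engine deterministic and conservative: the agent can only do what the policy explicitly permits, and high-stakes actions wait for a person.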
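Spotting an agent stuck in a loop is one of the simpler things trace analysis can do. This sketch assumes a trace has already been reduced to an ordered list of action names (a hypothetical format; real traces usually also carry arguments, timestamps, and model outputs) and checks whether the end of the trace is the same short cycle of actions repeated back to back.

```python
from typing import Sequence, Tuple


def find_repeated_cycle(
    trace: Sequence[str], max_cycle_len: int = 3, min_repeats: int = 3
) -> Tuple[str, ...]:
    """Return the shortest action cycle repeated back to back at the end of a trace.

    Returns an empty tuple if no cycle of length <= max_cycle_len repeats at
    least min_repeats times consecutively at the tail of the trace.
    """
    for length in range(1, max_cycle_len + 1):
        window = length * min_repeats
        if len(trace) < window:
            break  # longer cycles need even more history, so stop here
        tail = list(trace[-window:])
        cycle = tail[:length]
        # Every action in the window must match the candidate cycle position.
        if all(tail[i] == cycle[i % length] for i in range(window)):
            return tuple(cycle)
    return ()


stuck = ["search", "open_page", "search", "open_page", "search", "open_page"]
healthy = ["plan", "search", "summarize", "answer"]
print(find_repeated_cycle(stuck))    # a repeating (search, open_page) cycle
print(find_repeated_cycle(healthy))  # empty tuple: the agent is making progress
```

A flagged cycle gives engineers a concrete starting point: the instructions or tool outputs around those repeated steps are where the agent is losing track of its goal.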
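A grounded prompt of the kind described in the prompt-optimization bullet might be assembled as follows. The `build_grounded_prompt` helper and the exact instruction wording are hypothetical, and the sketch assumes the passages were just retrieved from a live source. Instructions like these reduce, but do not eliminate, reliance on memorized information, so they complement the other safeguards rather than replace them.

```python
from typing import Sequence


def build_grounded_prompt(question: str, passages: Sequence[str]) -> str:
    """Assemble a prompt telling the model to answer only from freshly retrieved text.

    Hypothetical helper: the instruction wording is illustrative, not a standard.
    """
    if not passages:
        raise ValueError("retrieve at least one passage before prompting")
    # Number each passage so the model can cite what it relied on.
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using ONLY the sources below. "
        "Cite sources by number, e.g. [1]. "
        "If the sources do not contain the answer, reply exactly: I don't know.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}\n"
    )


print(build_grounded_prompt("When did order A-1001 ship?",
                            ["Order A-1001 shipped on May 2.", "Carrier: UPS."]))
```

Asking for numbered citations also helps the observability side: a reviewer can check each claim in the answer against the passage it cites.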

What Comes After Agentic AI Is in Production?

Agent operations involve a sophisticated technology ecosystem, from application-layer agents and agent-enablement tools down to underlying infrastructure and services. As enterprises deploy more production-ready AI agents and move toward mature, complex multi-agent environments, observability and monitoring requirements will grow.

To oversee all this, organizations will need to create a new systems management and optimization role: the AI systems engineer. These next-gen QA engineers will continuously monitor agent interactions and fine-tune agent behavior for optimal effectiveness and error-free operation. The role will require deep knowledge of the technical mechanics of LLMs and retrieval-augmented generation (RAG) systems so that practitioners can orchestrate those systems.

In multi-agent environments, AI agents will be interacting and upgrading constantly, consuming a steady diet of new data to perform their individual jobs. When one of them gets bad data—intentionally or unintentionally—and changes its behavior, it can start performing its job incorrectly, even if it was doing it perfectly well just one day before. An error in one agent can then have a cascading effect that degrades the whole system.

Enterprises will hire as many AI systems engineers as it takes to keep that from happening—and the orchestration needed today is only in its infancy. To date, many layers of the agentic AI stack are in the early stages and largely composed of open-source projects. This is especially true for multi-agent protocols, communication schema, and monitoring and security tools.
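One way an AI systems engineer might catch an agent that worked yesterday but misbehaves today is to rerun a fixed “golden” set of test cases on a schedule and compare the pass rate with a known-good baseline. In this sketch, the agent is any function from prompt to text, exact string matching stands in for a real grader, and the 5-point tolerance is an arbitrary assumption; `GoldenCase`, `pass_rate`, and `has_regressed` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class GoldenCase:
    """A fixed input and the output a correctly behaving agent should produce."""
    prompt: str
    expected: str


def pass_rate(agent: Callable[[str], str], cases: Sequence[GoldenCase]) -> float:
    """Fraction of golden cases the agent answers exactly as expected."""
    if not cases:
        raise ValueError("need at least one golden case")
    passed = sum(1 for case in cases if agent(case.prompt).strip() == case.expected)
    return passed / len(cases)


def has_regressed(today: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag the agent if today's pass rate fell more than `tolerance` below baseline."""
    return today < baseline - tolerance


# Toy agents: the same agent before and after it ingested bad data.
cases = [GoldenCase("2+2", "4"), GoldenCase("capital of France", "Paris")]
yesterday_agent = lambda p: {"2+2": "4", "capital of France": "Paris"}.get(p, "")
today_agent = lambda p: {"2+2": "4", "capital of France": "Lyon"}.get(p, "")

baseline = pass_rate(yesterday_agent, cases)
today = pass_rate(today_agent, cases)
print(f"baseline={baseline:.0%} today={today:.0%} regressed={has_regressed(today, baseline)}")
```

In a multi-agent system, running a check like this per agent helps localize a failure to the agent that changed before its errors cascade downstream.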

In the future, as fine-tuning of LLMs grows more sophisticated, it will enable levels of nuanced honing that we can’t even begin to imagine right now.

What Have We Learned?

Although the promise of agentic AI is undisputed, the category remains a work in progress. Founders have clear opportunities to develop defensibly differentiated solutions addressing high-value needs, particularly in vertical use cases and areas where gaps in the operational stack need to be filled.

For enterprises, agents represent the next step beyond generative AI, offering even greater productivity and efficiency through autonomous decisions and automated actions—provided the necessary safeguards are in place.

If you’re building one of those differentiated AI agent solutions or you just have thoughts on our perspective, reach out to Scott Beechuk or Bayan Alizadeh. We’re all ears.